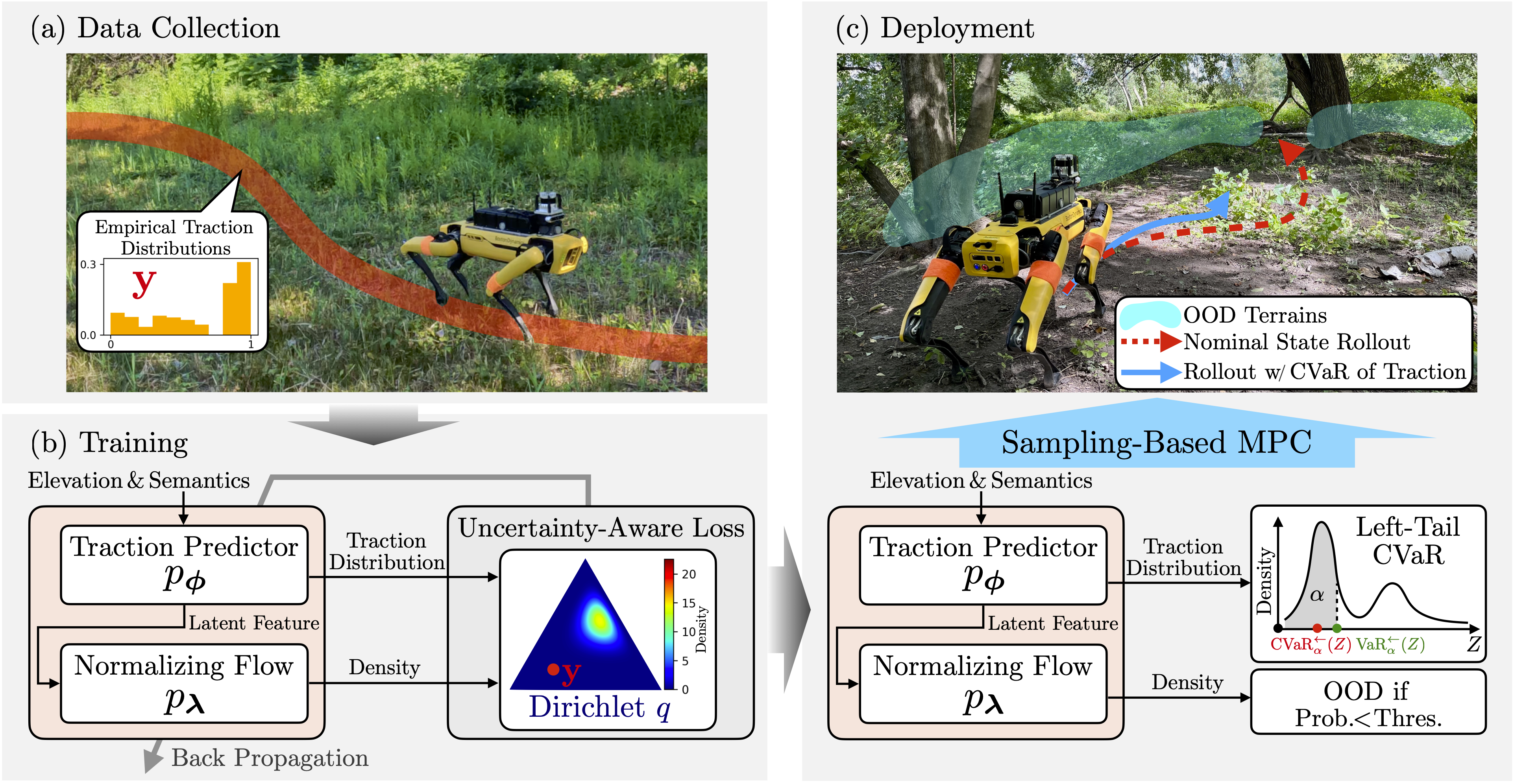

Traversing terrain with good traction is crucial for achieving fast off-road navigation. Instead of manually designing costs based on terrain features, existing methods learn terrain properties directly from data via self-supervision to automatically penalize trajectories moving through undesirable terrain, but challenges remain in properly quantifying and mitigating the risk due to uncertainty in the learned models. To this end, we present evidential off-road autonomy (EVORA), a unified framework to learn an uncertainty-aware traction model and plan risk-aware trajectories. For uncertainty quantification, we efficiently model both aleatoric and epistemic uncertainty by learning discrete traction distributions and probability densities of the traction predictor’s latent features. Leveraging evidential deep learning, we parameterize Dirichlet distributions with the network outputs and propose a novel uncertainty-aware squared Earth Mover’s Distance loss with a closed-form expression that improves learning accuracy and navigation performance. For risk-aware navigation, the proposed planner simulates state trajectories with the worst-case expected traction to handle aleatoric uncertainty and penalizes trajectories moving through terrain with high epistemic uncertainty. Our approach is extensively validated in simulation and on wheeled and quadruped robots, showing improved navigation performance compared to methods that assume no slip, assume the expected traction, or optimize for the worst-case expected cost.
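To make the idea of a "worst-case expected traction" over a discrete traction distribution concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the EVORA implementation: it assumes the Dirichlet concentration is `evidence + 1` (a common evidential deep learning convention) and formalizes "worst-case expected" as the mean of the lowest `level` fraction of probability mass (a left-tail conditional value at risk). The function names `dirichlet_mean` and `left_tail_cvar`, the bin values, and the risk level are all hypothetical.

```python
import numpy as np


def dirichlet_mean(evidence):
    """Expected categorical distribution under Dirichlet(alpha).

    Assumption: alpha = evidence + 1, with non-negative evidence per bin.
    """
    alpha = np.asarray(evidence, dtype=float) + 1.0
    return alpha / alpha.sum()


def left_tail_cvar(values, probs, level):
    """Mean of the worst (lowest-value) `level` fraction of probability mass.

    `values` are the discrete traction bins, `probs` their probabilities,
    and `level` is a risk level in (0, 1]. level = 1 recovers the plain mean.
    """
    values = np.asarray(values, dtype=float)
    probs = np.asarray(probs, dtype=float)
    order = np.argsort(values)
    values, probs = values[order], probs[order]
    # Probability mass already consumed before each bin, lowest bins first.
    mass_before = np.concatenate(([0.0], np.cumsum(probs)[:-1]))
    # Take mass from each bin until `level` is used up.
    taken = np.clip(level - mass_before, 0.0, probs)
    return float(np.dot(taken, values) / level)


# Hypothetical bimodal traction distribution (e.g. over vegetation):
# the robot either gets stuck (traction 0) or moves freely (traction 1).
bins = np.array([0.0, 0.25, 0.5, 0.75, 1.0])
probs = np.array([0.4, 0.0, 0.0, 0.0, 0.6])

expected = float(np.dot(bins, probs))
worst_case = left_tail_cvar(bins, probs, level=0.5)
print(expected, worst_case)
```

For this bimodal example the expected traction is 0.6, while the worst-case expected traction at level 0.5 is about 0.2, which is why a planner that simulates with the tail value is more cautious over such terrain than one that assumes the mean.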

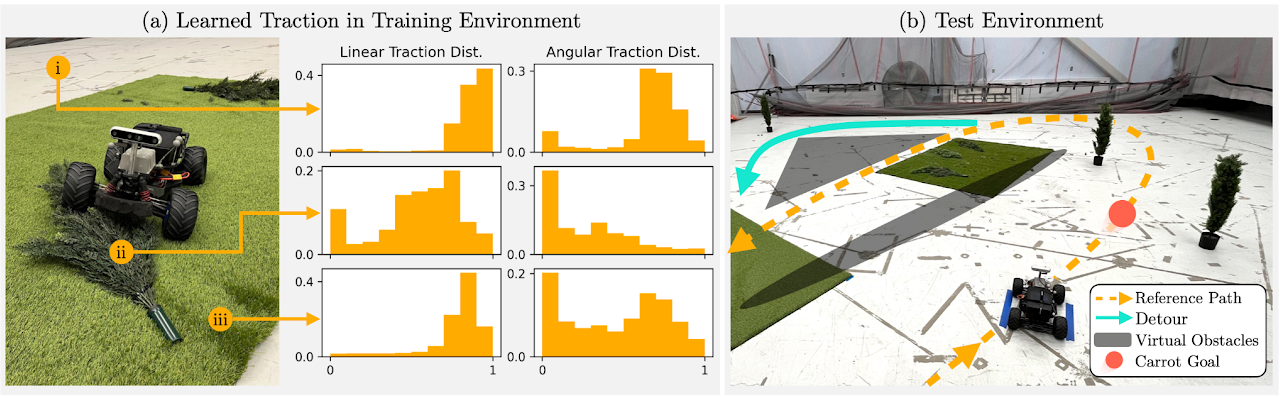

The training and test environments used for the indoor racing experiments. Note that the bimodality of the traction distribution over the vegetation could cause the robot to slow down significantly. During testing, the robot was tasked to drive two laps following a carrot goal along the reference path while deciding between a detour without vegetation and a shorter path with vegetation.

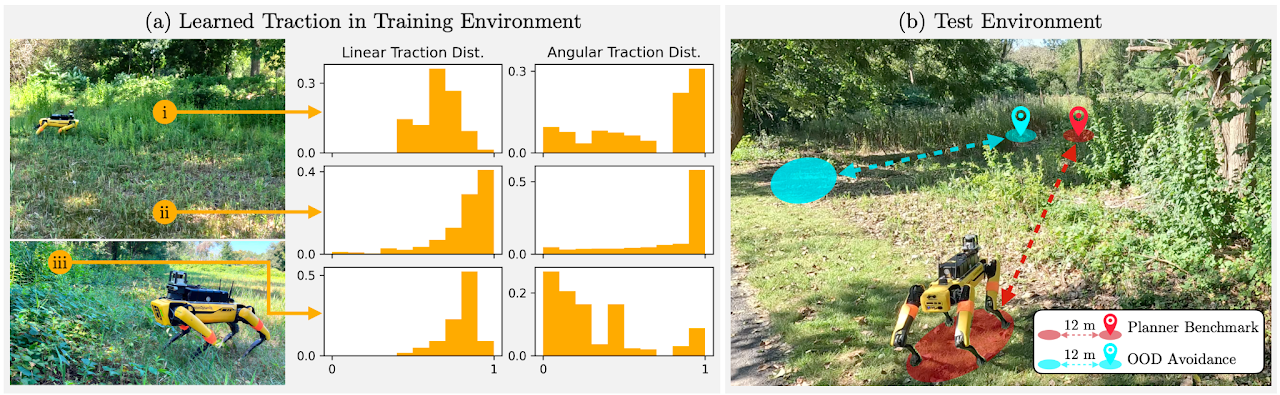

The outdoor environment consisted of vegetation terrains with different heights and densities. Unlike wheeled robots, a legged robot typically has good linear traction through vegetation, but angular traction may exhibit multi-modality due to the greater difficulty of turning. During testing, two start-goal pairs were used to benchmark the planners and analyze the benefits of avoiding out-of-distribution (OOD) terrains.

@article{cai2024evora,
  title={{EVORA: Deep Evidential Traversability Learning for Risk-Aware Off-Road Autonomy}},
  author={Cai, Xiaoyi and Ancha, Siddharth and Sharma, Lakshay and Osteen, Philip R and Bucher, Bernadette and Phillips, Stephen and Wang, Jiuguang and Everett, Michael and Roy, Nicholas and How, Jonathan P},
  journal={IEEE Transactions on Robotics},
  volume={40},
  pages={3756-3777},
  year={2024}
}